NOTE: This post assumes you are acquainted with first and second order optimisation techniques

Table of contents

Open Table of contents

Motivation

Optimisation is probably the most important part for Deep learning. The main problems are the curse of dimensionality and irregular cost function topologies. Everything would have been so perfect if either one of these wouldn’t exist. SGD has become so popular, with the hope that, the stochastic noise added helps escaping the local optima and saddle points. But still this doesn’t work everytime.

Second order optimisers such as L-BFGS, CG and other quasi newton methods work very well in lower dimensions but they fail in the higher dimension because of the memory and computational growth is exponential w.r.t increase in dimension.

This paper proposes a new faster method - curve ball addressing the issues of 2nd order optimisers

Optimisation Methods

Let’s quickly review the standard optimisation problem and the methods to solve it

Optimisation Problem

A usual machine learning problem involves, Finding an optimum which minimises (or max) the loss function , Objective may also be constrained. But we could add a penalty function and make it unconstrained, So let’s focus only on this:

And is defined as:

Here, is the input data point. So, in short we need to find the global minima of the function

Gradient Descent

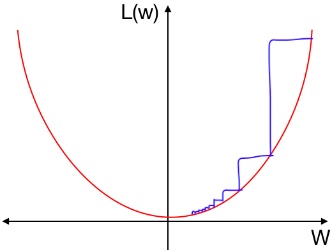

I think this image best explains the Gradient Descent. The idea is to take the step in the direction of the gradient. The update rule for the iterate would be:

is the learning rate or the step size at time t. Note that this update rule is discretisation of the ode:

It’s usually difficult to evaluate this term everytime (over all dataset).

So we approximate the term as:

and this gives us Stochastic Gradient Descent (actually mini-batch). Which is a noisy discretisation of above ode:

The convergence is noisy but it may or may not get stuck at local minima and saddle points

Newton’s Method

This is actually a root finding method for a function f. Since, minima is the root of we can use this update rule to find out:

We can think of this as newton’s method provides a value for the step size parameter :

For vector we have:

H, J are hessian and jacobian (of ) respectively

FMAD and RMAD

Work in progress

To-Do

- FMAD

- RMAD

- Algorithm

- References and image ref

- Grammar check